27 Mar

How Hyperscale Assemblies Support the Future of Data Centers

Ever wonder how global tech giants like Google, Facebook, and YouTube can run their services seamlessly and globally? While we take those services for granted, the reality is that they would not be possible without a way to structure data centers to provide the same experience at the same time in Russia, Australia, Brazil, the United States, and practically every other country in the world. It requires colossal computing power, using hyperscale assemblies, with reliable redundancy and superior security to deliver these services consistently, all day, every day, everywhere.

Users of these services also drive the need for massive computing power. How can thousands of people stream the same videos and movies at the same time without interruptions? The Netflix experience needs to be as seamless as watching a movie on DVD.

Meet the new data center: the hyperscale computing center, which can process enormous amounts of data to meet the business requirements of tech firms and the expectations of their users. These hyperscale computing processes are made possible through custom hyperscale cable assemblies!

Innovations Driving Hyperscale in Data Centers

Three innovations drive the hyperscale data center:

- The Internet

- Cloud computing

- Big data

With the Internet, everything is now connected to everything else. This gives publishers and platforms instant global access and scale.

The cloud allows companies to save big money by retiring their physical on-site data centers or networks. Need more computing power? No problem, you can buy more from your cloud provider at a set price. Need less? Again, no problem, you can easily downgrade to a small-scale service.

For example, if a law firm takes on a big case and needs to add attorneys and support staff quickly, the firm doesn’t need to spend money on expensive servers, and doesn’t need to take the time to source, receive, configure and test the new hardware.

Cloud computing allows the firm to scale up quickly with a few keystrokes and mouse clicks. When the case is finished, the firm can quickly scale back without warehousing used equipment. The savings in time and money are huge.

In recent history, data was highly structured and controlled. In today’s world of big data, advanced software can process unstructured data, and dynamically create its own structures to meet the task at hand. Structured data is like your checkbook, while unstructured data is like the pile of receipts that you’re hoping to sort out and make sense of someday.

With the advent of the iPhone in 2007, and services like Google, Facebook and Twitter, the amount of new data being created every instant is staggering and will only get bigger as more users participate. Traditional data centers simply cannot handle this constant avalanche of data.

What Is a Hyperscale Data Center?

The term “hyperscale computing” refers to the ability to “hyperscale” up or down as needed, quickly and massively. That is, the data center can quickly and cost-effectively add or reduce computing power.

The goal of scaling is to be able to add power quickly while the data center continues to run its tasks reliably. Scaling out, or horizontal scaling, refers to increasing the number of machines in the network. Scaling up, or vertical scaling, refers to adding additional computing power (memory, storage capacity and networking capabilities) to the machines in service. Being able to scale in both directions helps tech companies improve uptime and response time while handling ever-increasing workloads.
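The difference between the two directions can be sketched in a few lines of Python. This is only an illustration: the `Cluster` class, the "capacity units" and the numbers are hypothetical and are not taken from any real data center.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Cluster:
    machines: int           # number of machines in the network
    units_per_machine: int  # hypothetical "capacity units" per machine

    @property
    def capacity(self) -> int:
        # Total capacity is simply machines times per-machine capacity.
        return self.machines * self.units_per_machine


def scale_out(cluster: Cluster, extra_machines: int) -> Cluster:
    """Horizontal scaling: add more machines of the same size."""
    return replace(cluster, machines=cluster.machines + extra_machines)


def scale_up(cluster: Cluster, extra_units: int) -> Cluster:
    """Vertical scaling: add power to the machines already in service."""
    return replace(cluster, units_per_machine=cluster.units_per_machine + extra_units)


base = Cluster(machines=100, units_per_machine=8)
wider = scale_out(base, 100)                 # twice as many machines
taller = scale_up(base, 8)                   # twice as much power per machine
both = scale_up(scale_out(base, 100), 8)     # scaled in both directions

print(base.capacity, wider.capacity, taller.capacity, both.capacity)
```

In this toy model either direction doubles capacity on its own, and combining them quadruples it, which is why being able to scale both ways matters.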

What really sets hyperscale computing apart from traditional data centers is its massive ability to scale up and out. This means that, theoretically, projects have no limits. A traditional data center runs hundreds of physical servers and thousands of virtual machines. (A virtual machine is an emulation of a single physical computer, a key ingredient in data center architecture.) In contrast, a hyperscale data center runs thousands of physical servers and millions of virtual machines.

While hyperscale computing is a creature of the cloud, not all of the cloud runs in hyperscale. In fact, most hyperscale data centers are owned by a handful of companies. At the end of 2017, there were nearly 400 hyperscale data centers in operation, with only 24 companies responsible for building and maintaining them. U.S. tech companies account for 44 percent of hyperscale data centers. Industry giants like Google, IBM, Amazon and Microsoft each have at least 45 data centers globally. The next largest share belongs to China, with only eight percent of the market.

While there’s no universally agreed definition of a hyperscale data center, International Data Corporation, a market intelligence firm, defines hyperscale computing as exceeding 5,000 servers and 10,000 square feet. But in fact, some hyperscale data centers contain hundreds of thousands or even millions of servers, and are housed in huge buildings. The scale is illustrated by a Microsoft hyperscale center in Quincy, Washington, which has 24,000 miles of network cable, nearly enough to wrap around the equator. That is sweet dreams for cable and connectivity manufacturers, as well as assembly operations.
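As a quick sanity check on the figures in this paragraph, here is a minimal Python sketch. The function name `is_hyperscale` is made up for illustration, and the equatorial circumference of about 24,901 miles is a commonly cited figure that does not come from this article.

```python
# Thresholds quoted above from International Data Corporation.
IDC_MIN_SERVERS = 5_000
IDC_MIN_SQUARE_FEET = 10_000

# Assumption: Earth's equatorial circumference is roughly 24,901 miles.
EQUATOR_MILES = 24_901


def is_hyperscale(servers: int, square_feet: int) -> bool:
    """True if a facility exceeds both IDC thresholds quoted in the text."""
    return servers > IDC_MIN_SERVERS and square_feet > IDC_MIN_SQUARE_FEET


# Fraction of the equator covered by 24,000 miles of network cable.
quincy_cable_miles = 24_000
equator_fraction = quincy_cable_miles / EQUATOR_MILES

print(is_hyperscale(6_000, 20_000))
print(f"{equator_fraction:.0%} of the equator")
```

Under that assumption the cable would cover roughly 96 percent of the equator, which matches the "nearly enough" wording.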

Data centers of this scale are expensive to build, but with enough automation, these centers are relatively inexpensive to run and maintain, pound for pound, when compared to traditional data centers.

Efficiency Through Automation

This focus on automation is about designing systems that heal themselves. For example, if a mouse gnawing on a wire disables a rack of servers, the system is designed to automatically scale up to replace that resource with a new one, with backed-up applications, services and data ready to go. What would take hours by hand in a traditional data center now happens in seconds or less. Similarly, if the system suddenly overloads with data and users, automated processes add the capacity to handle the load.
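The self-healing idea can be sketched as a simple reconcile pass. This is a toy model under stated assumptions, not how any particular operator's automation works: the `reconcile` function, the rack dictionaries and the capacity numbers are all hypothetical.

```python
def reconcile(racks, spares, load, capacity_per_rack):
    """Run one self-healing pass.

    Failed racks are swapped for spares, then more spares are brought
    online while the load exceeds the total capacity in service.
    Returns (active_racks, remaining_spares).
    """
    active = [rack for rack in racks if rack["healthy"]]
    remaining = list(spares)

    # Step 1: replace every failed rack with a spare, if one is available.
    failed_count = len(racks) - len(active)
    for _ in range(failed_count):
        if remaining:
            active.append(remaining.pop(0))

    # Step 2: add capacity while the load exceeds what is in service.
    while len(active) * capacity_per_rack < load and remaining:
        active.append(remaining.pop(0))

    return active, remaining


racks = [
    {"name": "rack-a", "healthy": True},
    {"name": "rack-b", "healthy": False},  # the mouse got to this one
]
spares = [
    {"name": "spare-1", "healthy": True},
    {"name": "spare-2", "healthy": True},
    {"name": "spare-3", "healthy": True},
]

active, remaining = reconcile(racks, spares, load=250, capacity_per_rack=100)
print([rack["name"] for rack in active])
print([rack["name"] for rack in remaining])
```

Here the failed rack is replaced by one spare, and a second spare is added because two racks cannot carry a load of 250 units. A real system would run a pass like this continuously and would also restore the backed-up applications, services and data.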

Unlike traditional data centers that employ a large full-time staff covering a variety of specialties, many hyperscale data centers employ fewer tech specialists because so much of the technology is automated.

It’s estimated that by the end of 2019, there will be about 500 hyperscale data centers online. Internet users can rest assured that as they find more reasons to interact with online resources, and as more services become available, hyperscale computing will be there to keep the experience reliable and seamless.

NAI Supports Hyperscale Computing

A related trend, and one that has been going on for decades, is the miniaturization of electronics. Servers, switches and routers have become smaller, allowing more equipment to be densely packed in enclosures and racks. The challenge for installers is to work in these tight and dense spaces to hook up the jumpers and interconnects that are needed to connect equipment.

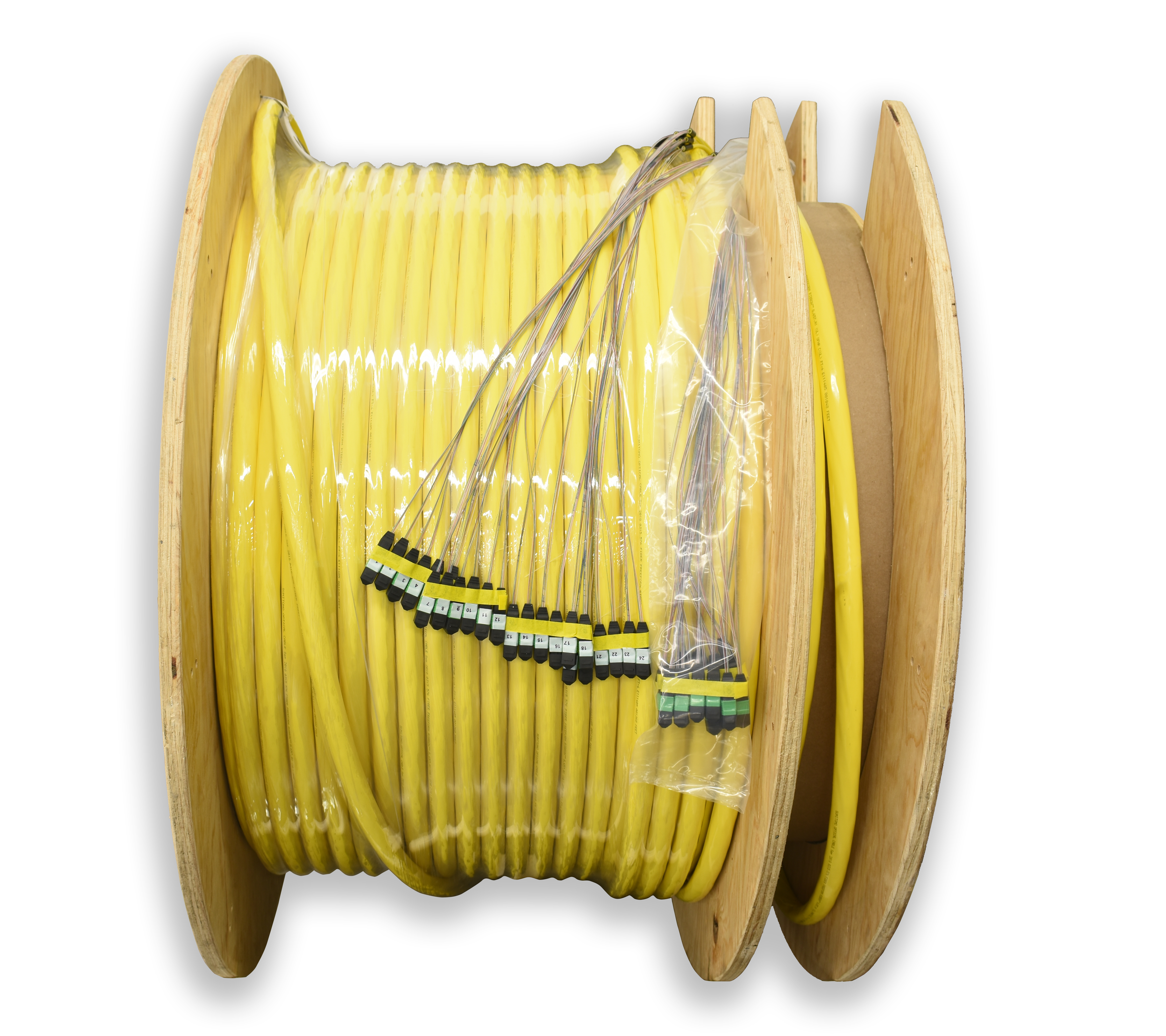

NAI has worked with companies in hyperscale data centers to custom design pre-terminated fiber optic cable assemblies. Customers can order these with LC, MPO, or any other fiber optic connector they require. Pre-terminated assemblies (with connectors at one end) are delivered on crate reels with overall lengths determined by the customer. The installer can then cut the assemblies to the lengths needed in the field, and needs to attach connectors at only the cut end.

Customized pre-terminated fiber optic cable assemblies are available with 288, 576 and 1,728 fibers. Assemblies with a 3,456 fiber count are a recent capability at NAI. Complete assemblies, connectorized at both ends, are also available at NAI.